Uncertainty

Uncertainty pervades investing, and yet few take the time to think about it holistically. Doing so greatly improves an investor’s ability to translate edge into alpha. It starts with bounding one’s knowledge. It continues with transforming that knowledge into insight (analysis), then synthesizing those insights into expected value. It culminates in expressing that expected value in a portfolio through a probabilistic allocation of risk. Doing all of this efficiently over time, while maintaining edge, is the only way to sustainably outperform.

Take a Step Back

We previously defined uncertainty in the context of portfolio construction as “the range of expected variance for a position.” That is a fairly loaded statement. To unpack it, let’s start with the broader concept of uncertainty and work our way into practice. As investors, we are often trying to know the unknowable, so the natural starting point is an examination of the different types of knowledge.

Types of Uncertainty

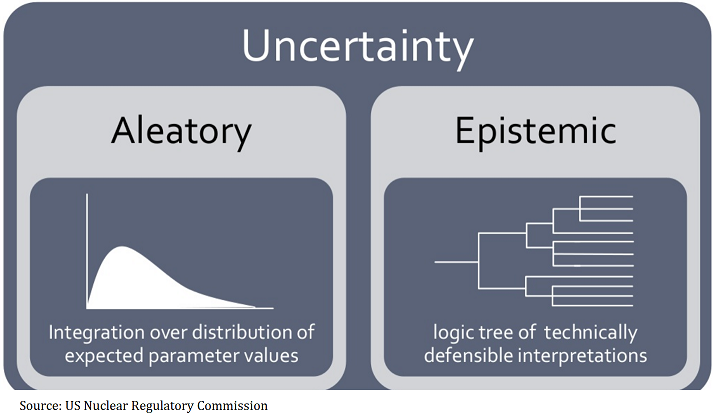

There are two types of uncertainty: epistemic and aleatory. Epistemic risk refers to knowable information. Though perhaps not readily apparent, it is available to the meticulous analyst: the microprocessor’s clock speed, the price point, the earnings, the footnote disclosures. Epistemic uncertainty can be conquered by data accumulation and then wrangled through varying degrees of analysis.

Aleatory risk subsumes the unknowable. It represents knowledge that is unattainable irrespective of effort or analytical prowess. Will it rain on July 16, 2022? What will the S&P 500’s VWAP be on the sixth Thursday of the summer? Aleatory risk arises in complex adaptive systems, where chaotic dynamics prevail and render distant precision a fool’s errand. Together, these two risks confront one’s portfolio with a seemingly impossible ask: how does one factor in unknowability when attempting to discount and express value?
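One way to make the distinction concrete is a toy statistical model. The sketch below is purely illustrative and rests on an assumption of ours, not the article’s: outcomes are independent draws from a normal distribution with known noise `sigma` and an unknown mean estimated from `n_obs` past observations. Uncertainty about the mean (epistemic) shrinks as data accumulates, while the noise in the next draw (aleatory) does not, so the total predictive uncertainty, σ·√(1 + 1/n), never falls below σ however much data is gathered. The function name `predictive_std` is hypothetical.

```python
import math


def predictive_std(sigma: float, n_obs: int) -> tuple[float, float, float]:
    """Split the uncertainty of the next outcome into epistemic and aleatory parts.

    Toy model (an assumption for illustration only): outcomes are i.i.d. normal
    with known noise sigma and an unknown mean estimated from n_obs observations.
    """
    epistemic = sigma / math.sqrt(n_obs)  # standard error of the estimated mean
    aleatory = sigma                      # irreducible noise of the next draw
    total = math.sqrt(epistemic**2 + aleatory**2)  # equals sigma * sqrt(1 + 1/n)
    return epistemic, aleatory, total


for n in (1, 10, 100, 10_000):
    e, a, t = predictive_std(sigma=1.0, n_obs=n)
    print(f"n={n:>6}: epistemic={e:.3f}  aleatory={a:.3f}  total={t:.3f}")
```

More data drives the epistemic column toward zero, but the total converges to the aleatory floor rather than to zero.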

Connecting the Two

The epistemic and aleatory are bound through probability. This process starts with analysis. Analysis acts as a torch in a cave, illuminating the range of visible knowledge. The hazy but recognizable figures at the edge of our knowledge are where mispricing occurs, but the darkness just beyond is the realm of speculation.

Analysis bounds epistemic risk in pursuit of reward (alpha). It does so by defining the degree to which success depends on the precision of our epistemic circle of competence versus the aleatory fog. Take a world-beating product like the iPhone. It was never obvious upon announcement that the product would be a blockbuster; Apple’s earlier Newton handheld certainly wasn’t. Rather than forming grand impressions, epistemic analysis breaks the process down into analyzable building blocks: competitive advantage, logistical prowess, distribution strategy, technological edge, and so on.

Synthesis between the epistemic and the aleatory is where probability comes into play. Probabilities help us define how our understanding of the epistemic aspects of the product, business, and industry can lead to future outcomes. Returning to the iPhone, given an informed perspective on competitive advantage, economies of scale, distribution, and technological lead, the analyst can now assign probabilities to gains in market share, expected revenue growth, and future profitability. These are the foundations for opining on the relative attractiveness of an investment. The valuation piece is the crucial step in actually making money in a pari-mutuel system, because the payoff depends not on whether an outcome is good but on whether it is better than what the price already implies.
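A minimal sketch of that synthesis step, assuming the analyst has already reduced the building blocks to a handful of discrete, mutually exclusive scenarios. The scenario names, probabilities, per-share values, and market price are all hypothetical, as are the `Scenario` and `expected_value` names; the point is only that probabilities turn analysis into a single expected value that can then be compared with what the market is paying.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    probability: float  # analyst's subjective probability (hypothetical)
    value: float        # hypothetical value per share if this scenario plays out


def expected_value(scenarios: list[Scenario]) -> float:
    """Probability-weighted value across mutually exclusive scenarios."""
    total = sum(s.probability for s in scenarios)
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"scenario probabilities sum to {total}, not 1")
    return sum(s.probability * s.value for s in scenarios)


scenarios = [
    Scenario("niche product", 0.50, 80.0),
    Scenario("steady share gains", 0.35, 120.0),
    Scenario("category-defining", 0.15, 250.0),
]
ev = expected_value(scenarios)
price = 100.0  # hypothetical market price per share
print(f"expected value: {ev:.2f} vs. price {price:.2f} ({ev / price - 1:+.1%})")
```

In this made-up case the most likely scenario is worth less than the price, yet the probability-weighted value sits above it; the position is attractive only because the analyst assigns real probability to the upside that the price does not reflect.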

A Hidden (Alpha) Target

Note how our iPhone example didn’t even touch on truly speculative aspects, like imagining a world with a billion-plus daily iPhone users, the dominance of the App Store, and the other hallmarks of the product ecosystem nearly 15 years later. Or consider the expanding TAM of smartphones, from a niche into a juggernaut category. Can an analysis of a nascent product lead to such conclusions? How does that factor into an uncertainty framework? Does generating alpha require one to model such things? The answer: it depends.

Generating alpha didn’t always require that type of conjecture. One hundred years ago, alpha could be had through the efficient processing of what is today quantifiable, primitive, epistemic risk. Data as naïve as price-to-book ratios, let alone competitive advantages, was routinely mispriced. This was the basis for the success of Ben Graham, arguably the father of quant investing (not only value investing!). Interestingly, the two disciplines overlapped in Graham’s time because value could be found through simple data processing.

Over time, progress in know-how and technology erodes easy sources of epistemic alpha. Today, price-to-book ratios shouldn’t afford an opportunity for alpha beyond a purely behavioral rationale. Returning to our cave analogy, technological progress has given the security analyst better flashlights, sharpening our vision. As a result, alpha drifts away from epistemic prowess (e.g., I can crunch more numbers than you can) and into the bleed zone of analysis (i.e., I can interpret numbers better than you can), synthesis (i.e., I can interpret probabilistically better than you can), and ultimately aleatory risk (i.e., I can predict future events better than you can).

Aleatory risk has made many a fool of the pundit, speculator, and investor. Think of Paul Krugman dismissing the internet’s importance to the economy in the 1990s, Steve Ballmer dismissing the iPhone, or Gary Winnick’s Global Crossing building out fiber optics. Each of these speculations on aleatory risk seemed to encapsulate the zeitgeist of its time, and each was inherently speculative.

Aleatory risk is sometimes analyzed through frameworks of technological progress, such as s-curves. These have proven useful for describing certain speculative phenomena, but they operate in a purely ex post or ad hoc fashion. For example, the reason an s-curve decomposition seems to work for technology investing is hardly founded on an epistemic rationale. Things like Moore’s Law work until they don’t, so they’re hardly laws in the definitional sense. Remember, aleatory risk is intrinsically unknowable.

Unpacking Aleatory Risk: Probabilistic Convergence

Outside of venture capital or dyed-in-the-wool margin-of-safety investors, this framework of thinking (i.e., that important drivers of terminal value are inherently speculative) can be hard to grasp. To unpack it, let’s review a tangible example from public markets: annual sell-side S&P 500 estimates. Each January, banks publish their estimates of where the S&P 500 will end the year. The process is much maligned but highlights important truths about uncertainty.

Let’s assume the model underlying these estimates is exceptionally detailed and omniscient. Take 2020 as an example, and assume the model was so detailed that it assigned a probability to COVID being a systemically important event. This model even included detailed probability trees for how markets would react, including underlying expectations for events as varied as work-from-home adoption and fiscal stimulus.

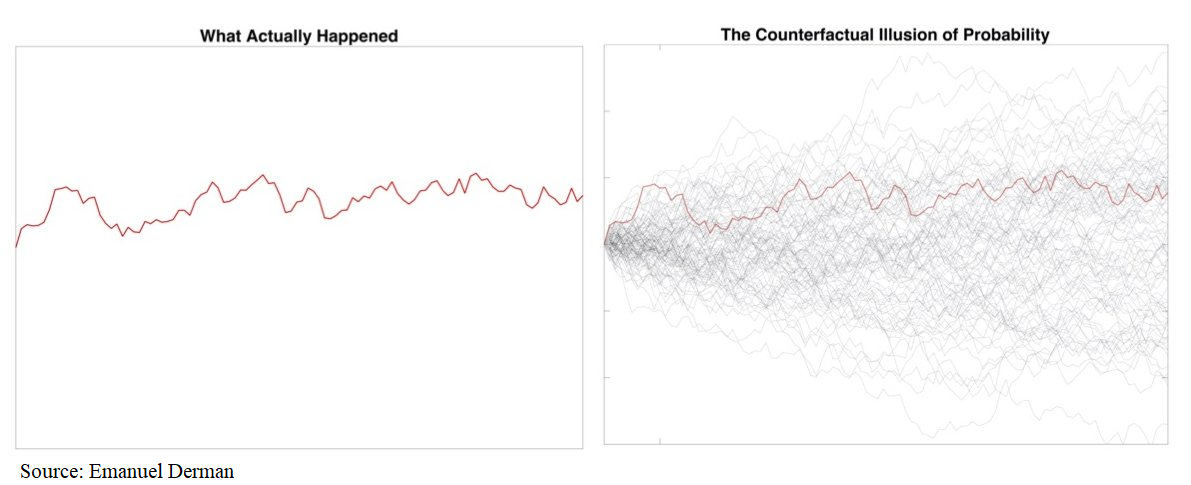

Of course, no one can do this (at least not with such precision or depth). But even with such hypothetical, demon-like ability, one’s S&P estimate will still not be accurate. Why? Because expectations of future events must be represented in probability space, given their inherently chaotic behavior. We could never have known for sure the path, severity, and spread of COVID. The model, however well calibrated, will ultimately ascribe a probability to COVID shutting down the US economy, a probability to the Federal Reserve’s reaction, and a probability to each of the countless causal steps required to get from there to here.

So even granting that our analytical prowess becomes near omniscient in the limit isn’t enough to nail the annual ritual of S&P level guessing. No matter how accurate one’s probability space is, all paths eventually converge to one reality. Because of this, a single-value estimate represents a probability-weighted blend of an infinite number of causal paths. Distilling that into a single value will almost certainly be wrong: a probability-weighted average may not match any individual path, and even the modal outcome is only right if it is the path that comes to pass.
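The convergence problem can be simulated. The sketch below uses a made-up, three-branch scenario tree (the probabilities and index levels are hypothetical, not a 2020 forecast), collapses it into a probability-weighted point estimate, and then lets “reality” draw one path per simulated year. Because the weighted estimate (3,495 here) sits between branches, it is never exactly right; even the modal branch is only right in the roughly 60% of years in which it happens to be the path drawn. The helper names are illustrative.

```python
import random

# Hypothetical year-end scenario tree: (probability, index level).
# Illustrative numbers only; not a forecast of any actual year.
SCENARIOS = [
    (0.60, 3600.0),  # no systemic shock
    (0.25, 3000.0),  # shock with a slow policy response
    (0.15, 3900.0),  # shock met by aggressive stimulus
]


def point_estimate(scenarios):
    """Collapse the whole distribution into one probability-weighted number."""
    return sum(p * level for p, level in scenarios)


def realize(scenarios, rng):
    """Draw the single path that actually comes to pass."""
    weights = [p for p, _ in scenarios]
    levels = [level for _, level in scenarios]
    return rng.choices(levels, weights=weights, k=1)[0]


estimate = point_estimate(SCENARIOS)
modal_level = max(SCENARIOS)[1]  # level of the most probable branch
rng = random.Random(2020)  # fixed seed so the run is reproducible
years = [realize(SCENARIOS, rng) for _ in range(10_000)]

exact_hits = sum(abs(level - estimate) < 1e-6 for level in years)
modal_hits = sum(level == modal_level for level in years)
print(f"weighted estimate: {estimate:.0f}")
print(f"years the weighted estimate was exactly right: {exact_hits}")
print(f"share of years the modal branch came true: {modal_hits / len(years):.1%}")
```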

Thinking In Bets: The Real Free Lunch

There is an absolute limit to analytical prowess, and even reaching it will not guarantee complete success in forecasting. The sell-side example tells us that nailing epistemic risk doesn’t guarantee a perfect S&P price target, and nailing the competitive advantage dynamics of the iPhone at launch doesn’t spit out a $2 trillion market cap 15 years later. The ability to put on a position and profit will always retain elements of aleatory risk. Because of this, the way we express risk is crucial to getting the most out of one’s hard work and analytical effort. The forecasting work is necessary to get closer to the truth, but how the bet is expressed is just as important in determining the payoff.

Market participants should think of their portfolio as an expression of multiple bets that afford them an opportunity to improve the probability of portfolio success. Bets can be tilted toward higher expected value outcomes, and, over time, accurate predictions will allow such a strategy to bear fruit. To do so, first nail down the epistemic part. In doing so, not only will you reduce input error, but you will also bound the range of your knowledge, avoiding those hidden pitfalls in the cave. Not fooling yourself into soothsaying what is unknowable is crucial to avoiding sub-optimal investing outcomes.
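To see why many tilted bets help, consider a stylized case under assumptions that real portfolios rarely satisfy: equal-sized, independent, even-money bets, each won with probability `p_win`. The hypothetical function below computes the exact binomial probability that more than half of the bets win, i.e., that the portfolio makes money. A 55% edge is barely better than a coin flip on any single bet, but the probability of an overall profit climbs as the number of independent bets grows. Correlated bets would dilute this effect considerably.

```python
from math import comb


def prob_portfolio_profitable(n_bets: int, p_win: float) -> float:
    """Exact probability that more than half of n independent bets win.

    Hypothetical setup: equal-sized, independent, even-money bets, so the
    portfolio makes money exactly when wins outnumber losses.
    """
    return sum(
        comb(n_bets, k) * p_win**k * (1.0 - p_win) ** (n_bets - k)
        for k in range(n_bets // 2 + 1, n_bets + 1)
    )


for n in (1, 11, 101, 1001):
    print(f"{n:>5} bets: P(profit) = {prob_portfolio_profitable(n, 0.55):.3f}")
```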

Next, take your analysis and formalize how you synthesize an edge. That edge should be a quantifiable framework for optimally expressing risk. Unlike the sell-side S&P level game, you can express a distribution of perspectives (positions) and size them according to your implied odds. You can find convex payouts that others ignore, and you can ensure adverse scenarios do not permanently impair the portfolio. Some might call this the free lunch from diversification. We would say that analyzing implied probabilities and thinking in bets is the real free lunch.
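The article does not prescribe a sizing rule. One well-known technique for sizing according to implied odds is the Kelly criterion, swapped in here purely as an illustration: for a binary bet paying `b` per unit staked, the market-implied win probability is 1 / (1 + b), and the full-Kelly stake is f* = (b·p - (1 - p)) / b, which is positive only when your probability `p` exceeds the implied one. The sketch below scales that stake down (half Kelly by default) as a nod to avoiding permanent impairment; the function names and the default scale are our assumptions.

```python
def implied_probability(net_odds: float) -> float:
    """Win probability implied by a price paying `net_odds` per unit staked."""
    if net_odds <= 0:
        raise ValueError("net_odds must be positive")
    return 1.0 / (1.0 + net_odds)


def kelly_fraction(p_win: float, net_odds: float, scale: float = 0.5) -> float:
    """Scaled Kelly stake as a fraction of capital (hypothetical helper).

    p_win:    your estimated probability that the bet wins
    net_odds: profit per unit staked if it wins (b in f* = (b*p - (1 - p)) / b)
    scale:    fraction of full Kelly; 0.5 ("half Kelly") gives up some growth
              in exchange for smaller drawdowns
    Returns 0.0 when p_win does not exceed the implied probability (no edge).
    """
    if not 0.0 <= p_win <= 1.0:
        raise ValueError("p_win must be a probability")
    if net_odds <= 0:
        raise ValueError("net_odds must be positive")
    full_kelly = (net_odds * p_win - (1.0 - p_win)) / net_odds
    return max(0.0, scale * full_kelly)


odds = 2.0  # hypothetical bet paying 2-to-1
print(f"implied probability: {implied_probability(odds):.3f}")
for belief in (0.30, 0.45, 0.60):
    print(belief, round(kelly_fraction(belief, odds), 4))
```

For this hypothetical 2-to-1 bet (implied probability of one third), a 45% belief gives a full-Kelly stake of 17.5% of capital and a half-Kelly stake of 8.75%, while a 30% belief, below the implied odds, stakes nothing.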

One Last If

If your knowledge accumulation, analysis, and synthesis do indeed reflect edge (a big if), thinking probabilistically in an uncertainty framework allows you to express that edge intelligibly over time through measured risk allocation. If your edge stays sharp and you stick to the framework, your results will bear that out.

If that weren’t hard enough, the treadmill in this game gets faster every day as forms of epistemic advantage are arbitraged away; our fellow cave spelunkers are now equipped with LED flashlights instead of torches. Keeping one’s edge is never preordained. It can run into the wall of a regime shift or be eaten away by more clever participants.

This is what makes the game truly rewarding. If you can stay at peak performance (keeping pace on that ever-faster treadmill!), wrangling uncertainty gives you a chance of converting edge into alpha.

---

The information contained in this article was obtained from various sources that Epsilon Asset Management, LLC (“Epsilon”) believes to be reliable, but Epsilon does not guarantee its accuracy or completeness. The information and opinions contained on this site are subject to change without notice.

Neither the information nor any opinion contained on this site constitutes an offer, or a solicitation of an offer, to buy or sell any securities or other financial instruments, including any securities mentioned in any report available on this site.

The information contained on this site has been prepared and circulated for general information only and is not intended to and does not provide a recommendation with respect to any security. The information on this site does not take into account the financial position or particular needs or investment objectives of any individual or entity. Investors must make their own determinations of the appropriateness of an investment strategy and an investment in any particular securities based upon the legal, tax and accounting considerations applicable to such investors and their own investment objectives. Investors are cautioned that statements regarding future prospects may not be realized and that past performance is not necessarily indicative of future performance.